Content authoring is the most labour-intensive part of building an adaptive learning solution, and teachers report that the quality of the learning content is the most important factor for them when using a learning platform.

For the authors responsible, working with the right tools allows them to target their effort where it will produce the most impact. With thousands of students working through hundreds of thousands of lessons, an adaptive learning platform has vast quantities of information that can help improve the content.

But how to extract it? How should an adaptive solution harness the data and empower content authors to easily improve content quality? This was the first step on our journey to the solution.

The right user feedback

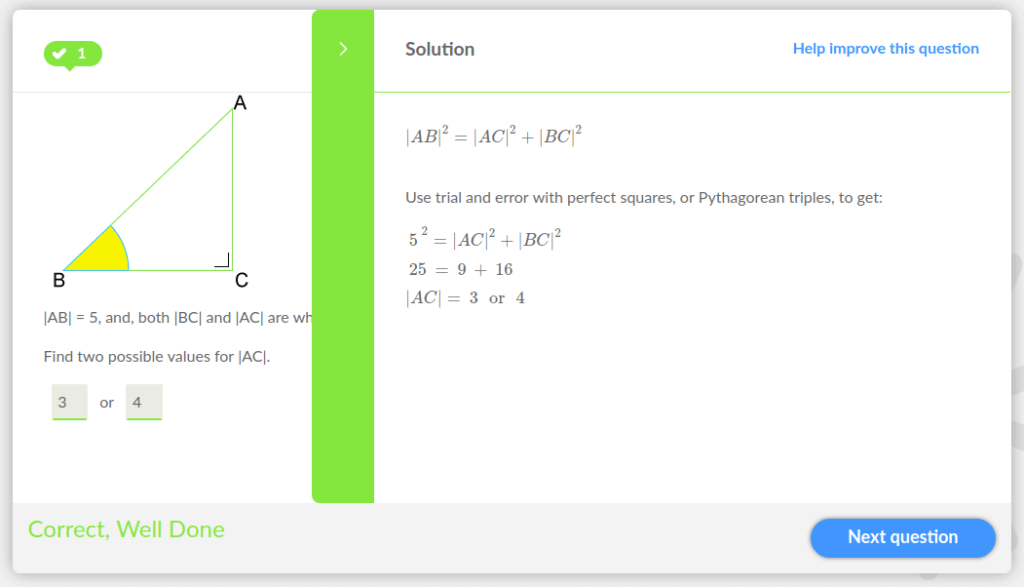

When students are answering questions in the Adaptemy platform, they can report a question that might be incorrect by pressing a button. You can see this labelled ‘Help improve this question’ in the accompanying image.

We initially intended to present these reports to guide content authors towards questions which need attention. The problem was that only a small proportion of the reports highlighted a genuine problem with the content. In the vast majority of cases, the content is correct and the student has made an error in their work.

Presenting this raw data to content authors was not an option. Authors would waste a great deal of time reviewing incorrect reports.

We needed to do some initial processing of these reports before presenting the data. Our aim was to extract the reports which are more likely to highlight a genuine problem.

We developed two means of filtering for higher quality reports:

Approach 1: Prioritise reports from teachers

Compared to reports from students, reports submitted by teachers are much more likely to be correct. The majority of reports from teachers highlight a genuine problem (and many of the remaining reports are submitted by teachers testing the feature rather than reporting an error). Showing teacher reports ahead of student reports brings high-quality reports to the fore.
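As a minimal sketch of this ordering (not the platform's actual implementation), the snippet below sorts a list of reports so that teacher submissions come first while the original order is otherwise preserved. The `Report` class, its field names and the role values `"teacher"` and `"student"` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Report:
    # All field names here are illustrative assumptions, not the real schema.
    question_id: str
    reporter_id: str
    reporter_role: str  # assumed to be "teacher" or "student"
    comment: str = ""


def prioritise_teacher_reports(reports):
    """Return reports with teacher submissions first.

    sorted() is stable, so reports keep their original relative order
    within the teacher group and within the student group.
    """
    return sorted(reports, key=lambda r: r.reporter_role != "teacher")


if __name__ == "__main__":
    queue = [
        Report("q1", "s1", "student"),
        Report("q2", "t1", "teacher"),
        Report("q3", "s2", "student"),
    ]
    for report in prioritise_teacher_reports(queue):
        print(report.reporter_role, report.question_id)
```

A stable sort is used here so that any existing ordering, for example by submission time, survives within each group.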

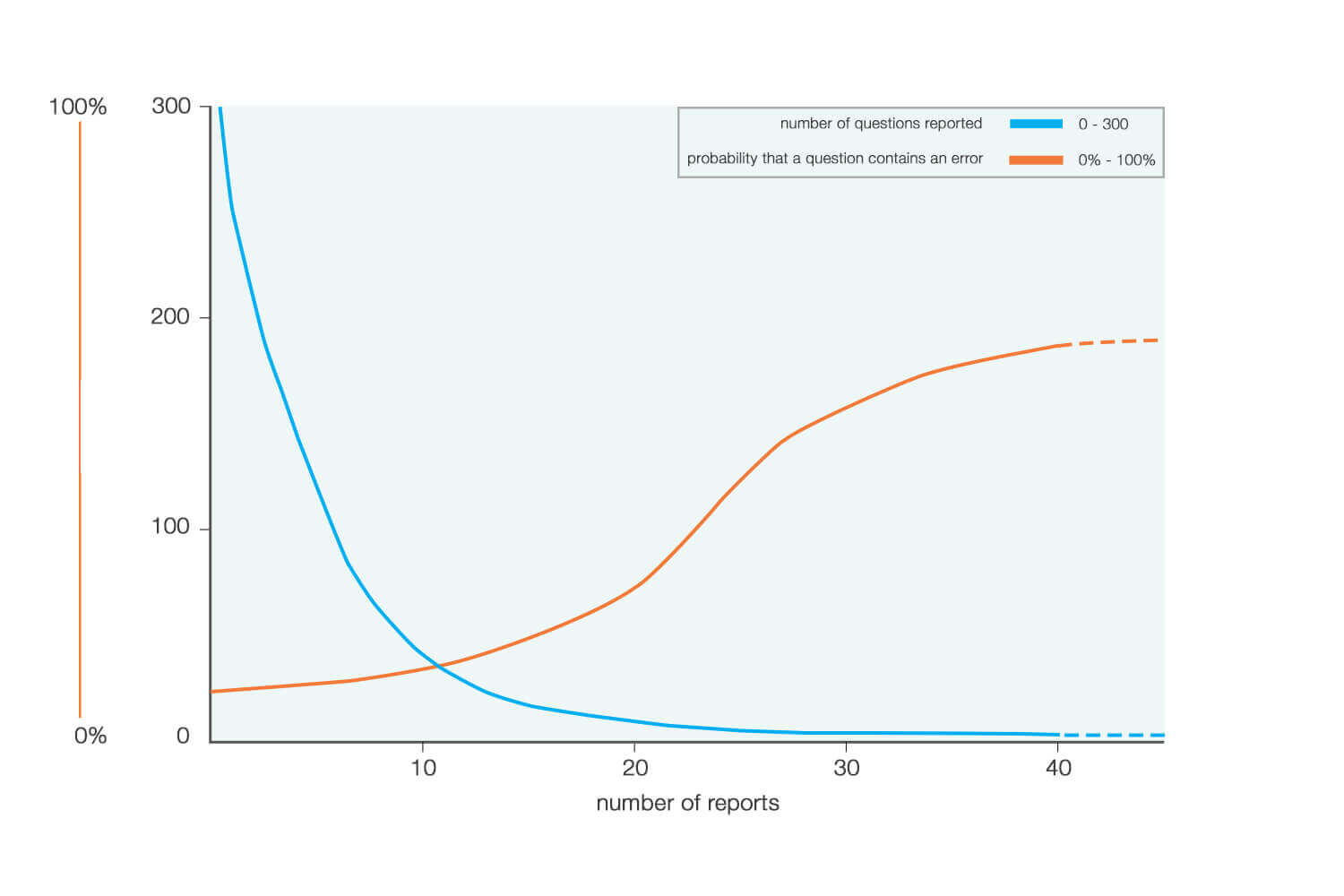

Approach 2: Show reports for questions which have been reported several times

Questions which have been independently reported by several students are more likely to highlight a genuine problem. Filtering student reports to only show questions which have been reported several times increases the probability that we will draw an author’s attention towards questions which need improvement. This is shown in the accompanying graph.
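One way this filter could look in code is sketched below. It counts distinct students per question, so that repeated reports from the same student are not treated as independent, and keeps only questions at or above a threshold. The function name, the `(question_id, student_id)` input shape and the default threshold of 3 are assumptions made for illustration; the right value for "several" would need to be tuned against real data.

```python
from collections import defaultdict


def questions_reported_repeatedly(reports, min_reporters=3):
    """Return (question_id, distinct_reporter_count) pairs, most reported first.

    reports: iterable of (question_id, student_id) pairs (hypothetical shape).
    min_reporters: assumed threshold for "reported several times".
    """
    reporters = defaultdict(set)
    for question_id, student_id in reports:
        # A set ignores duplicate reports from the same student.
        reporters[question_id].add(student_id)

    flagged = [
        (question_id, len(students))
        for question_id, students in reporters.items()
        if len(students) >= min_reporters
    ]
    # Highest count first; ties broken by question id for a stable display order.
    flagged.sort(key=lambda item: (-item[1], item[0]))
    return flagged
```

Raising `min_reporters` shows authors fewer questions with a higher chance of a genuine problem; lowering it surfaces more questions at the cost of more false alarms.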

Additional insight

In addition to highlighting opportunities to improve individual content items, these reports also highlight areas where the overall learning experience can be improved.

Students submitting incorrect reports have misunderstood something about a question. Reviewing these incorrect reports can give insight into where students are struggling and what can be done to help them.

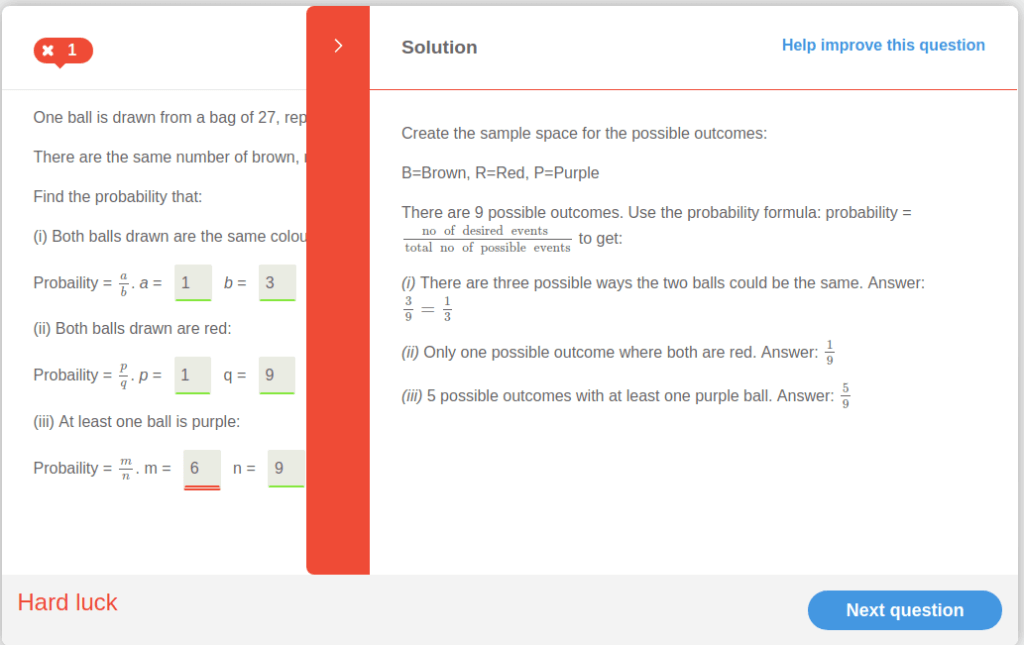

For example, we noticed that a lot of incorrect reports were submitted when students needed to answer multi-part questions. They might make a simple error in one part of the question and not understand why their answers had been marked as incorrect.

We improved this experience by colouring their incorrect attempt red (see the accompanying image). This draws their attention to their error and increases their confidence in the system and the content.

For more insight into how we support the content authoring process, please get in touch.